Introduction

I decided to work towards making the MUD run on an event-sourcing model with CQRS. CQRS stands for Command Query Responsibility Segregation. It’s just a fancy way to say that the manner in which we inject data into the system (in this case, as events) can be different from the way in which one reads the state (such as a Redis query).

This change in technical direction requires that I create an event for every kind of state mutation I plan to allow. I’ve never implemented CQRS, so I expect to learn a few things.

Currently, to seed the data, I run a YAML-to-Redis converter I wrote. This is a generic facility that will take any YAML and mirror it in Redis. Because Redis doesn’t support hierarchical values, I simulate them by extending the key names into a kind of path. At the top level we simply have values, lists, and maps. Map entries are scalar. Some of the resulting keys:

90) "monsters:monster:blob"

91) "monsters:monster:golem"

92) "items:armor:medium armor"

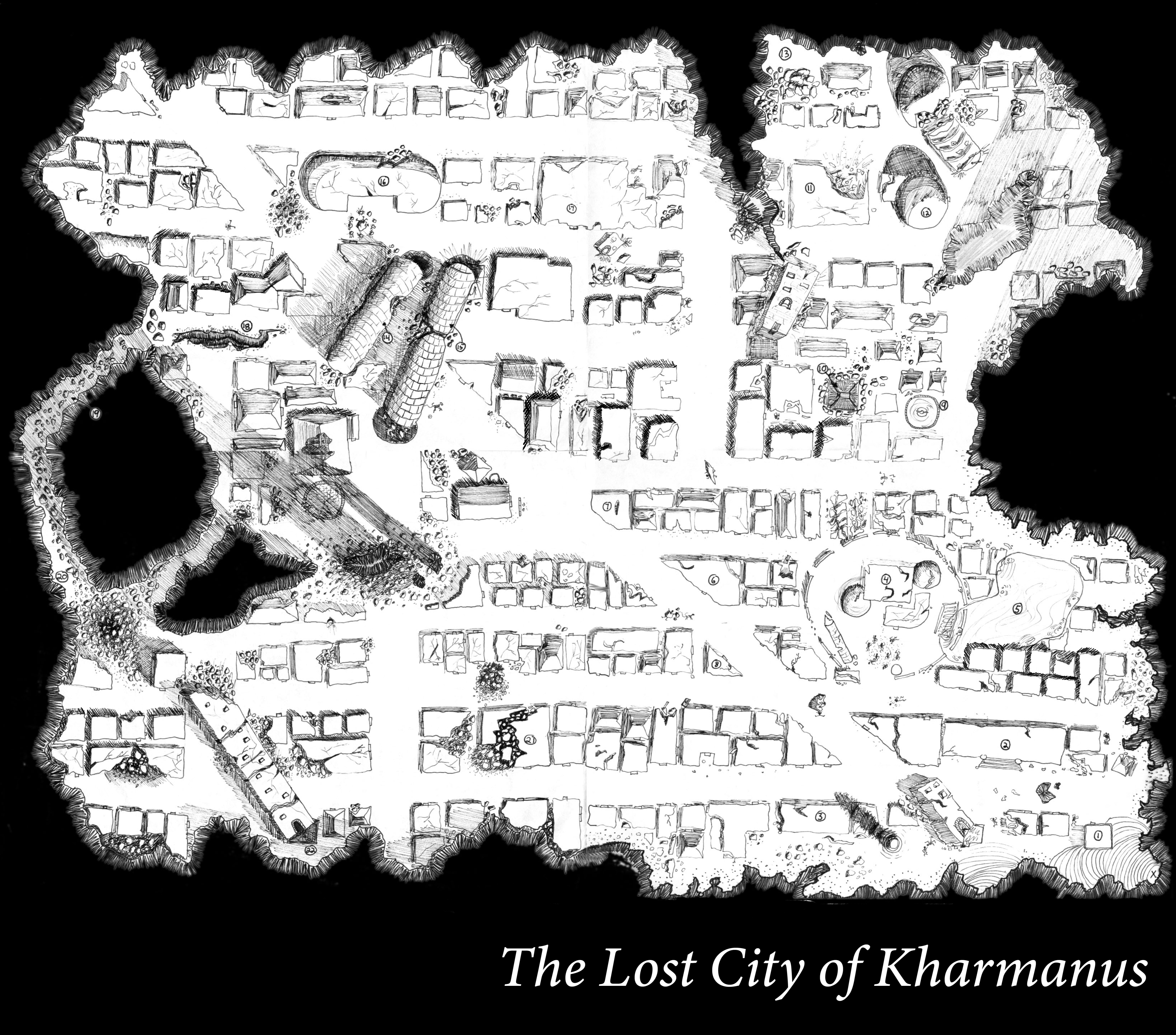

93) "places:population-center:Karpus"

94) "places:population-centers"
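The key-path scheme described above can be sketched roughly as follows. This is a minimal illustration and not the actual converter: the function name `flatten`, the `(key, kind, value)` output shape, and the hit-dice values in the sample data are all assumptions made up for the example. It takes an already-parsed YAML document (a nested dict) and produces one entry per Redis key.

```python
from typing import Any, Iterator, Tuple


def flatten(node: Any, prefix: str = "") -> Iterator[Tuple[str, str, Any]]:
    """Yield (key, kind, value) triples; kind is 'map', 'list', or 'value'."""
    if isinstance(node, dict):
        if all(not isinstance(v, (dict, list)) for v in node.values()):
            # A map whose entries are all scalar is stored as one unit
            # (for example, as a Redis hash).
            yield prefix, "map", dict(node)
        else:
            # Otherwise descend, extending the key name like a path.
            for name, child in node.items():
                key = f"{prefix}:{name}" if prefix else str(name)
                yield from flatten(child, key)
    elif isinstance(node, list):
        yield prefix, "list", list(node)
    else:
        yield prefix, "value", node


# Hypothetical sample data shaped like the keys listed above.
data = {
    "monsters": {
        "monster": {
            "blob": {"hit-dice": "1d6"},   # placeholder value
            "golem": {"hit-dice": "8d6"},  # placeholder value
        }
    },
    "places": {"population-centers": ["Karpus"]},
}

keys = [key for key, kind, value in flatten(data)]
print(keys)
```

Each triple would then map onto a Redis write of the matching type (a plain string, a list, or a hash).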

But this won’t do in a system based on the event-sourcing model. I can’t just mutate the state all at once like that. It would violate principles of event sourcing, like being able to replay events and, during replay, have the system process each event with the correct starting state.

For instance, if a character starts out at level 1 with 10hp, and we replay a set of events created during an adventure, the character might be level 2 at the end of those events. If we replay but skip the state changes that established the character (such as them starting at level 1), the events will be handled against whatever state is already there, and the system will think the character started at level 2. So spawn-character is an important event: it establishes a new character, and interactions with that character should start from that point.
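To make the replay argument concrete, here is a small sketch of state being rebuilt purely from events. The event names (`CharacterSpawned`, `CharacterLeveledUp`) and the `Character` fields are hypothetical, chosen only for illustration. The point is that replay starts from an empty state, so the spawn event is what supplies the level 1 / 10hp starting point.

```python
from dataclasses import dataclass, replace
from typing import Dict, Iterable


@dataclass(frozen=True)
class Character:
    name: str
    level: int
    hp: int


def apply(state: Dict[str, Character], event: dict) -> Dict[str, Character]:
    """Return a new state with one event applied; unknown events are ignored."""
    new_state = dict(state)
    if event["type"] == "CharacterSpawned":
        new_state[event["name"]] = Character(event["name"], event["level"], event["hp"])
    elif event["type"] == "CharacterLeveledUp":
        current = new_state[event["name"]]
        new_state[event["name"]] = replace(current, level=current.level + 1)
    return new_state


def replay(events: Iterable[dict]) -> Dict[str, Character]:
    """Rebuild state from scratch by folding over the event log."""
    state: Dict[str, Character] = {}
    for event in events:
        state = apply(state, event)
    return state


events = [
    {"type": "CharacterSpawned", "name": "hero", "level": 1, "hp": 10},
    {"type": "CharacterLeveledUp", "name": "hero"},
]

print(replay(events))
```

Because `replay` never looks at previously stored state, running it twice over the same log gives the same result, which is the property a bulk YAML load would break.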

It’s possible I could create an “event” such as “load-yaml-file” whose payload is the YAML file contents, but I think the lack of granularity might prove unworkable.

Instead of injecting a map for a monster:

Monster::Gargoyle

Hit-dice: 4d6

I’ll say something like “add-update-monster”, and this event should carry all the data needed to add a new monster or update an existing monster’s state.

This way, as the event makes its way across the system, each component has a signal to either act on it or ignore it. This is what makes replay possible in an event-sourced system.
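As a rough sketch of that idea, the snippet below builds an “add-update-monster” event and shows one component that acts on it and ignores everything else. The field names (`type`, `name`, `payload`), the `MonsterCatalog` class, and the choice to JSON-encode the attributes are assumptions for illustration, not a settled design. Encoding the attributes as a single string keeps the event a flat map of strings.

```python
import json
from typing import Dict


def monster_add_update(name: str, attributes: Dict[str, str]) -> Dict[str, str]:
    """Build a flat event; nested data is carried as a JSON string."""
    return {
        "type": "add-update-monster",
        "name": name,
        "payload": json.dumps(attributes, sort_keys=True),
    }


class MonsterCatalog:
    """Hypothetical component that only cares about monster events."""

    def __init__(self) -> None:
        self.monsters: Dict[str, Dict[str, str]] = {}

    def handle(self, event: Dict[str, str]) -> bool:
        if event["type"] != "add-update-monster":
            return False  # not for this component: ignore it
        attributes = json.loads(event["payload"])
        # Add the monster if it is new, otherwise merge in the updated fields.
        self.monsters.setdefault(event["name"], {}).update(attributes)
        return True


catalog = MonsterCatalog()
handled = catalog.handle(monster_add_update("Gargoyle", {"hit-dice": "4d6"}))
ignored = catalog.handle({"type": "some-other-event"})
print(catalog.monsters, handled, ignored)
```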

Redis Streams

Redis has a streams feature which is ideal for modeling these events. For now, I’m going to use the simple XREAD command, which allows any client to consume every message in the stream.
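A minimal sketch of writing to and reading from a stream is shown below. It assumes the redis-py client, whose `xadd(name, fields)` and `xread(streams, count=..., block=...)` methods wrap the XADD and XREAD commands. The stream name `mud:events` and the helper names are made up for the example, and the reply parsing assumes redis-py's default (RESP2) reply shape of `[[stream, [(id, fields), ...]]]`.

```python
from typing import Any, Dict, List, Tuple

STREAM = "mud:events"  # hypothetical stream name


def publish(client: Any, event: Dict[str, str]) -> Any:
    """Append one event to the stream; Redis assigns and returns the entry ID."""
    return client.xadd(STREAM, event)


def read_from(
    client: Any, last_id: str = "0", count: int = 100
) -> Tuple[List[Tuple[str, Dict[str, str]]], str]:
    """Read entries newer than last_id; return them plus the ID to resume from."""
    reply = client.xread({STREAM: last_id}, count=count)
    entries = []
    for _stream, messages in reply:
        for entry_id, fields in messages:
            entries.append((entry_id, fields))
            last_id = entry_id  # remember how far we have read
    return entries, last_id
```

With redis-py, `client` would be something like `redis.Redis(decode_responses=True)`. Starting from ID `0` reads the stream from the beginning, which is what a replay needs, and passing the returned ID back into `read_from` continues where the previous call stopped.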

I did some benchmarking.

Hardware:

- Redis runs on a dedicated Linux box (Intel Core i5-3570K CPU @ 3.40GHz)

- stream writer/reader programs to send/receive data from Redis (AMD Ryzen 9 3900X 12-Core Processor)

When the source/sink programs run on my Windows desktop, inside a WSL2 Debian container, we get:

~770.17 mps (messages per second)

But the CPU was more or less idle on both the Windows desktop and the Redis box, so the network was the likely bottleneck. I repeated the test with the source/sink programs running on the Redis Linux box itself, eliminating the network, and the results were roughly 9x faster:

~7011.41 mps

I could not get single-threaded writes to be much faster than that, but I did find a bug in the reader, and once I fixed it I was able to receive 100-200k messages/sec, which is great.
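For reference, a messages-per-second figure like the ones above can be computed with a loop along these lines. This is only a sketch of the arithmetic, not the benchmark programs used here. The `measure_mps` helper and its `send` callback are hypothetical; in a real run `send` would wrap a single blocking write to the stream.

```python
import time
from typing import Callable


def measure_mps(send: Callable[[int], None], count: int) -> float:
    """Call send(i) count times on one thread and return messages per second."""
    start = time.perf_counter()
    for i in range(count):
        send(i)
    elapsed = time.perf_counter() - start
    # Guard against a zero interval on very fast, very short runs.
    return count / max(elapsed, 1e-9)


# Stand-in for a network write: just record the message number.
sent = []
print(f"~{measure_mps(sent.append, 10_000):.2f} mps")
```

Since each call waits for the previous one to finish, a loop like this is bounded by round-trip latency rather than CPU, which matches the idle CPUs seen in the networked run.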

Now that I understand the technical foundation well, it’s time to start defining some mutation messages and modeling how they’ll appear on the stream.

Based on work so far, I have these events to define first:

- UserInputReceived

- UserOutputSent

- MonsterAddUpdate

- ItemAddUpdate

- PlaceAddUpdate

This is probably suboptimal naming, but I’m still new to this, so I expect to refine the event taxonomy over time as I see more events.

More on that later.